I’m a Ph.D. student at the State Key Lab of CAD&CG, Zhejiang University, under the supervision of Prof. Wei Chen. I am also fortunate to work closely with Qian Liu, Tianyu Pang, Haozhe Feng and Minfeng Zhu.

I’m currently interested in Trustworthy AI and LLMs.

🔥 News

- 2024.05: 🎉🎉 One paper accepted to ACL 2024.


📝 Publications

ACL 2024

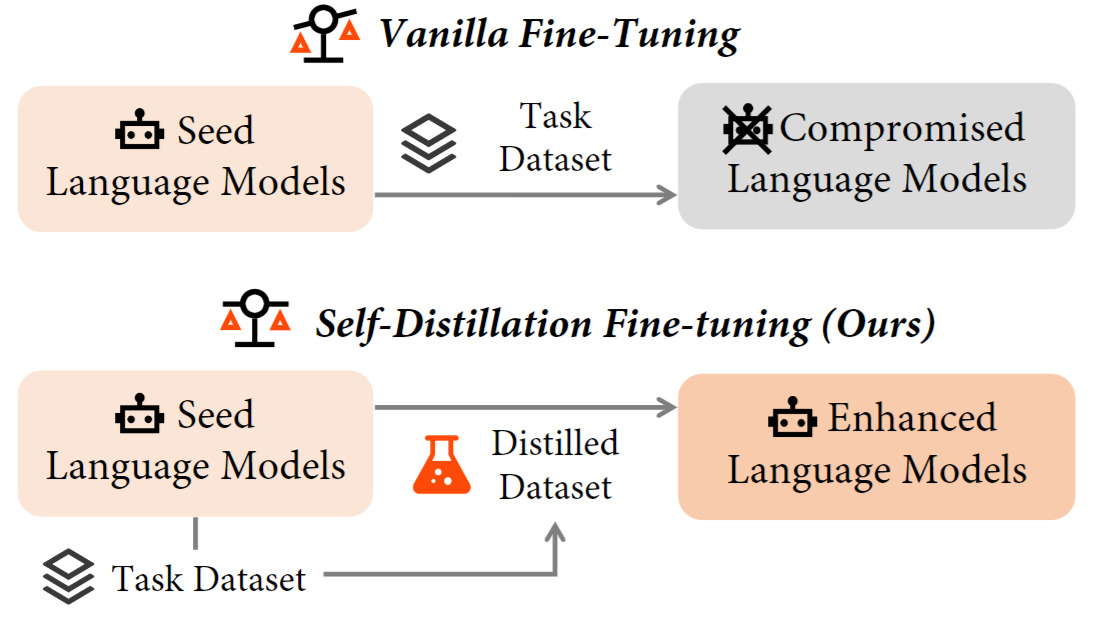

Self-Distillation Bridges Distribution Gap in Language Model Fine-Tuning

Zhaorui Yang, Tianyu Pang, Haozhe Feng, Han Wang, Wei Chen, Minfeng Zhu, Qian Liu

- Fine-tuning LLMs for specific tasks often struggles to balance task performance with preserving general instruction-following abilities. In this work, we posit that the distribution gap between task datasets and the LLMs is the primary underlying cause. To address this problem, we introduce Self-Distillation Fine-Tuning (SDFT), a novel approach that bridges the distribution gap by guiding fine-tuning with a distilled dataset that the model generates itself, so that the training data matches its original distribution.

arxiv:2506.02454


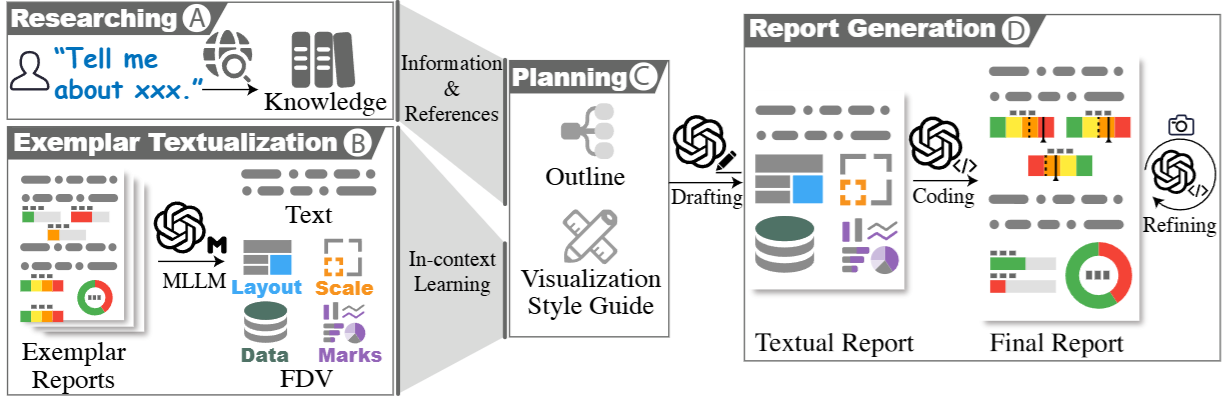

Zhaorui Yang*, Bo Pan*, Han Wang*, Yiyao Wang, Xingyu Liu, Minfeng Zhu, Bo Zhang, Wei Chen

- In this work, we propose Formal Description of Visualization (FDV), a structured textual representation of charts that enables LLMs to learn from and generate diverse, high-quality visualizations. Building on this representation, we introduce Multimodal DeepResearcher, an agentic framework that automatically generates comprehensive multimodal reports from scratch, interleaving text and visualizations.

arxiv:2304.06627

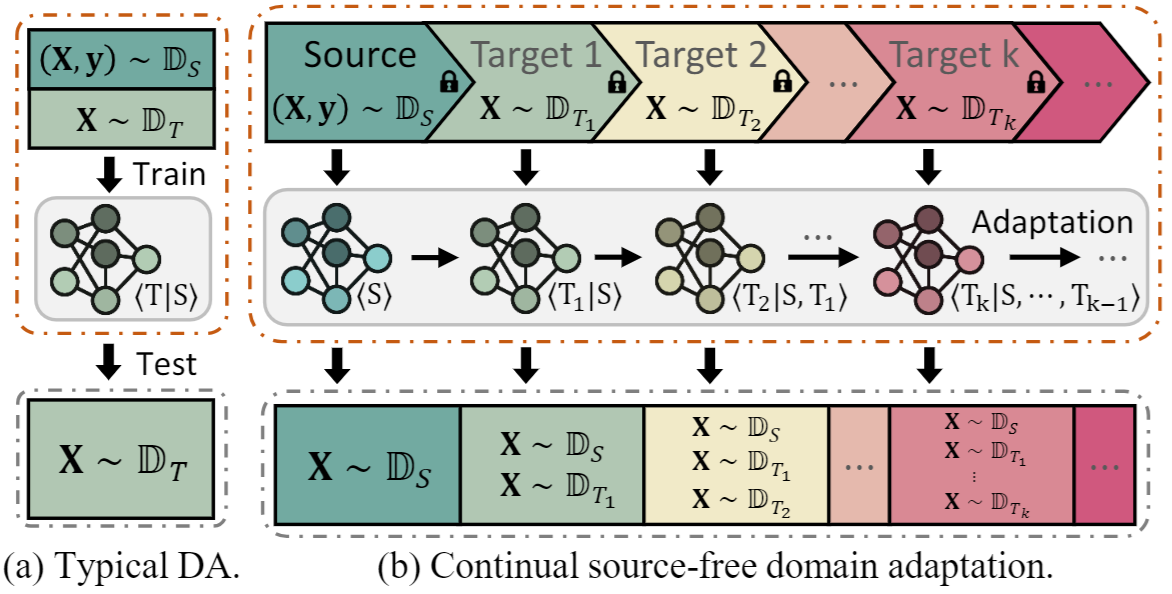

CoSDA: Continual Source-Free Domain Adaptation


Haozhe Feng*, Zhaorui Yang*, Hesun Chen*, Tianyu Pang, Chao Du, Minfeng Zhu, Wei Chen, Shuicheng Yan

- In this work, we investigate the mechanism of catastrophic forgetting in previous Source-Free Domain Adaptation (SFDA) approaches. We observe a trade-off between adaptation gain and forgetting loss. Motivated by these findings, we propose CoSDA, which outperforms SOTA approaches in continual adaptation.

🎖 Honors and Awards

- 2022.12 China National Scholarship (Undergraduate).

- 2021.12 China National Scholarship (Undergraduate).

📖 Education

- 2023.09 - Present

Ph.D. student in Software Engineering at the State Key Lab of CAD&CG, Zhejiang University.

- 2019.09 - 2023.06

B.E. in Software Engineering, Xi’an Jiaotong University.


💻 Internships

None yet.